Reasons Why Google Isn’t Indexing Your Site

1. No domain

The first possible reason is that your site has no domain name. Google won’t index a site that can only be reached through an IP address. This can happen because of an incorrect URL or a misconfigured WordPress setting.

Fixing this is easy.

Check whether your site’s URL looks like “https://XXX.XXX”. If it does, someone may have entered an IP address instead of a domain name.
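As a rough illustration of that check, the sketch below tests whether the host part of a URL is a raw IP address rather than a domain name. The function name `host_is_ip` and the sample URLs are made up for this example.

```python
import ipaddress
from urllib.parse import urlsplit


def host_is_ip(url: str) -> bool:
    """Return True if the URL's host is an IPv4/IPv6 address, not a domain name.

    Hypothetical helper for illustration only.
    """
    host = urlsplit(url).hostname
    if host is None:
        return False
    try:
        ipaddress.ip_address(host)
    except ValueError:
        # Not parseable as an IP address, so treat it as a domain name.
        return False
    return True


if __name__ == "__main__":
    # Sample URLs are placeholders (documentation address ranges).
    for url in ("https://203.0.113.7/blog", "https://example.com/blog"):
        print(url, "-> IP address" if host_is_ip(url) else "-> domain name")
```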

Your IP redirection may also be set up incorrectly.

Adding 301 redirects from the WWW version of each page to the matching page on your main domain can fix this issue. People who search for [yoursitehere] should land on your domain name.
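To verify a redirect like this, you could request the WWW host without following redirects and inspect the response. The sketch below is one way to do that with Python’s standard library. The host names are placeholders, the helper names are invented for this example, and treating 308 as equivalent to 301 is an assumption of this sketch.

```python
import http.client
from urllib.parse import urlsplit

# Status codes that signal a permanent redirect (assumption: 308 is accepted too).
PERMANENT_REDIRECTS = {301, 308}


def fetch_redirect(host: str, path: str = "/") -> tuple[int, str]:
    """Send one HEAD request and return (status, Location header) without following it."""
    conn = http.client.HTTPSConnection(host, timeout=10)
    try:
        conn.request("HEAD", path)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location", "")
    finally:
        conn.close()


def is_canonical_redirect(status: int, location: str, canonical_host: str) -> bool:
    """True if the response permanently redirects to the canonical host."""
    if status not in PERMANENT_REDIRECTS:
        return False
    # A relative Location has no hostname and is reported as False here.
    return urlsplit(location).hostname == canonical_host.lower()


if __name__ == "__main__":
    # "www.example.com" and "example.com" are placeholder hosts.
    status, location = fetch_redirect("www.example.com")
    print(status, location, is_canonical_redirect(status, location, "example.com"))
```

Keeping the decision logic in `is_canonical_redirect` separate from the network call makes it possible to check the rule against recorded responses as well as live ones.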

Domain names matter: you need one to rank on Google.

2. Mobile-Unfriendly Site

With mobile-first indexing, Google primarily uses the mobile version of your site for indexing and ranking, so a mobile-friendly website is essential.

If your website isn’t optimized for mobile devices, you’ll lose rankings and traffic.

Responsive design techniques such as fluid grids and CSS media queries help users find what they need without navigation problems.

First, run Google’s Mobile-Friendly Testing Tool on your site.

If your site doesn’t “pass,” make it mobile-friendly.
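As a quick local check before running a full test, you could look for a responsive viewport meta tag, which is one common signal of a mobile-ready page. The sketch below is only a heuristic and is not how Google’s tool works. The class and function names are invented for this example.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag seen."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.viewport is not None:
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "viewport":
            self.viewport = attr.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """Heuristic: True if the page declares a viewport with width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "").lower()


if __name__ == "__main__":
    page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
    print(has_responsive_viewport(page))
```

A page can pass this check and still be hard to use on a phone, so treat it as a first filter only.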

3. Your Code Is Too Complex for Google

Google may fail to index pages built with overly complex code. The language doesn’t matter; what matters is whether the code or its settings cause crawling and indexing problems.

If this is a problem, run your site through Google’s Mobile-Friendly Testing Tool and make any fixes it recommends.

If your website doesn’t meet Google’s standards, Google offers plenty of tools and design guidelines for responsive websites.

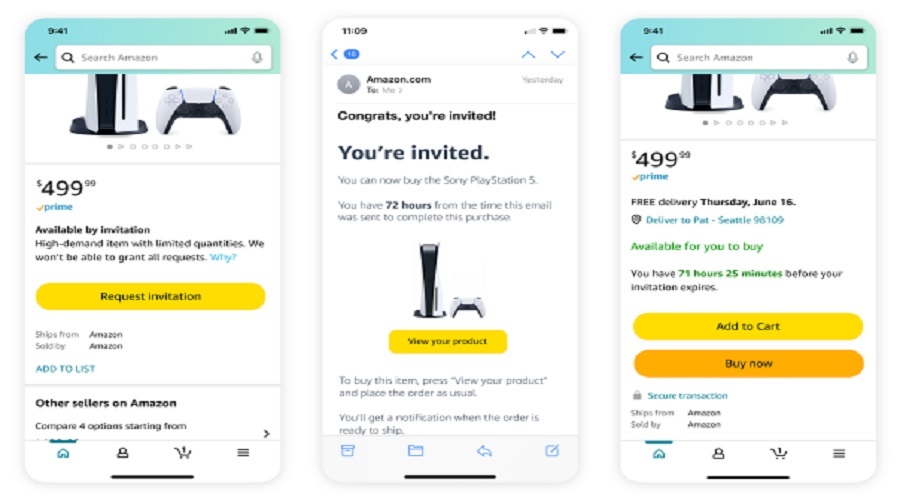

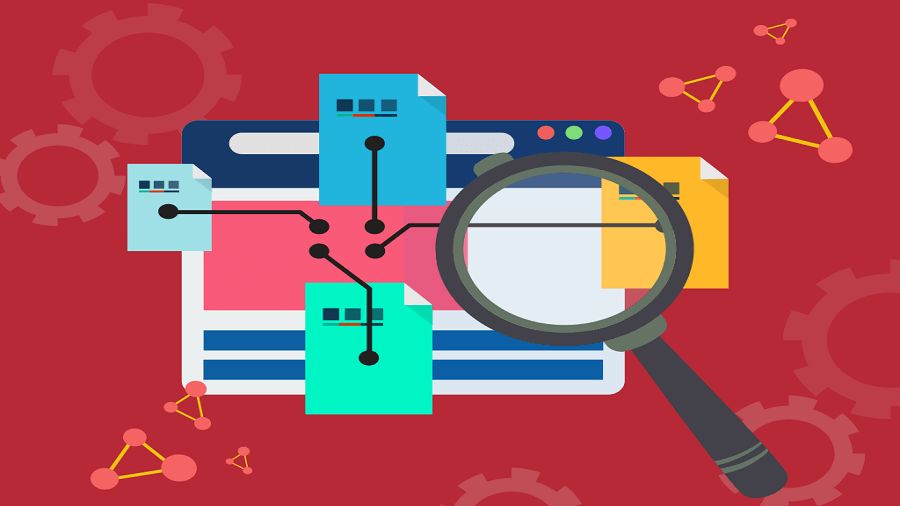

4. Slow Website

Google won’t prioritize slow-loading sites in its index. A site can load slowly for many reasons.

Too much content on the page or an old server with limited resources could be to blame.

Solutions:

Google PageSpeed Insights has been one of my favorite tools in recent years. It helps me identify which parts of a website need immediate speed improvements. The tool checks your page against five performance best practices that are critical for faster-loading sites, such as minimizing connections, reducing payload size, and leveraging browser caching, and then suggests how to improve your site.

Use webpagetest.org. This tool checks whether your website loads quickly and shows which page elements are causing problems. Its waterfall view can uncover performance issues before they cause trouble.

Use Google’s PageSpeed Insights again to optimize load times. Consider a new hosting plan with more resources (dedicated servers are better than shared ones) or a CDN that serves static content from caches around the world.

Your PageSpeed score should be at least 70. Ideally, it should be 100.
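If you want to track that score over time, the PageSpeed Insights API can be queried from a script. The sketch below assumes the v5 endpoint and a response in which the performance score is a 0 to 1 value under `lighthouseResult.categories.performance.score`; check both against the current API documentation before relying on them. The function names and the sample report are made up for this example.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint of the PageSpeed Insights API, version 5.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build the API request URL for one page."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def fetch_report(page_url: str) -> dict:
    """Download and decode the JSON report (needs network access)."""
    with urlopen(psi_request_url(page_url), timeout=60) as resp:
        return json.load(resp)


def performance_score(report: dict) -> int:
    """Convert the assumed 0-1 performance score into the familiar 0-100 scale."""
    score = report["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def meets_target(report: dict, minimum: int = 70) -> bool:
    """True if the score reaches the minimum suggested in the text (70)."""
    return performance_score(report) >= minimum


if __name__ == "__main__":
    # Hand-written sample in the assumed response shape, not real API output.
    sample = {"lighthouseResult": {"categories": {"performance": {"score": 0.55}}}}
    print(performance_score(sample), meets_target(sample))
```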

Check out SEJ’s Core Web Vitals ebook if you have questions about page speed.

5. Your Site Has Little Well-Written Content

Google rewards well-written content. If your content doesn’t match the quality of your competitors’, you may have trouble breaking into the top 50.

In our experience, content under 1,000 words tends to perform worse.

Are we a content-writing service? No. Is word count a hard rule? Also no; treat it as a rough guideline.

When you are up against competitors, well-written content is crucial.

Your website’s content must be useful. It must answer questions, provide information, or provide a unique perspective from other sites in your niche.

If your content doesn’t meet these criteria, Google will find another site with better content to rank.

If your website isn’t ranking well for some keywords even though you follow recommended SEO practices, your content may be the problem. One likely cause is thin pages with fewer than 100 words.

Thin pages can cause indexing trouble because they lack unique content and don’t meet quality standards.
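To find thin pages in bulk, you could count the visible words on each page. The sketch below strips tags, ignores script and style content, and flags pages under a threshold. The 100-word default comes from the figure above; the names `TextExtractor`, `word_count`, and `is_thin` are invented for this example, and the count is approximate (a word like “don't” is counted as two).

```python
import re
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects text nodes, skipping anything inside <script> or <style>."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def word_count(html: str) -> int:
    """Approximate number of visible words in an HTML document."""
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    return len(re.findall(r"\w+", " ".join(extractor.parts)))


def is_thin(html: str, minimum: int = 100) -> bool:
    """True if the page has fewer visible words than the minimum."""
    return word_count(html) < minimum


if __name__ == "__main__":
    page = "<p>Hello world</p><script>var x = 1;</script>"
    print(word_count(page), is_thin(page))
```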

6. Your Website Isn’t User-Friendly or Engaging

Good SEO requires a user-friendly, engaging website. Google will rank your site higher if users can find what they need and navigate it easily.

Google doesn’t want users spending time on a page that is slow to load, confusing, or full of distractions such as ads above the fold.

If you list only one product per category, Google may not consider your content good enough. Target keywords in each post and link related posts to other relevant articles and pages.

Do people share your blog? Does your content wow readers? If not, that may be why Google has stopped indexing your site.

If people link directly to a product page instead of using relevant keywords such as “buy” or “purchase,” other pages may be linking back to that product incorrectly.

Make sure every product in a category also appears in the right subcategories so users can make purchases quickly without navigating a complex linking hierarchy.

7. Redirect Loop

Redirect loops prevent indexing. They are usually caused by simple typos and can be fixed as follows:

Find the page that is looping. If you’re using WordPress, look for “Redirect 301” in the source of one of your posts or in an .htaccess file to determine which page the redirect starts from. Change any 302 redirects that should be permanent to 301.

Search for “redirect” in Windows Explorer (or with Command + F on a Mac) to locate the problem file.

Fix any mistakes so that no URL redirects back to itself, then apply the corrected redirect rule.
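What follows is not a redirect rule. It is a small sketch for spotting a loop once you have collected your redirect rules into a simple source-to-target table. The function name and the sample paths are hypothetical.

```python
def find_redirect_loop(redirects: dict[str, str], start: str) -> list[str]:
    """Follow redirects from `start`.

    Returns the URLs that form a loop, in order, or an empty list if the
    chain ends at a URL with no further redirect.
    """
    seen: list[str] = []
    url = start
    while url in redirects:
        if url in seen:
            # We came back to a URL already visited: everything from its
            # first appearance onward is the loop.
            return seen[seen.index(url):]
        seen.append(url)
        url = redirects[url]
    return []


if __name__ == "__main__":
    # Hypothetical rules: /a and /b point at each other, /old is fine.
    rules = {"/a": "/b", "/b": "/a", "/old": "/new"}
    print(find_redirect_loop(rules, "/a"))
    print(find_redirect_loop(rules, "/old"))
```

Running the check from every source URL in the table shows which rules need to be corrected before you resubmit the site.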

Google Search Console doesn’t always show 404s. A crawler such as Screaming Frog can help you find 404s and other error codes.

If everything looks good, use Google Search Console to crawl the site again and resubmit it for indexing. Check Google Search Console once a week for new warnings.

Google doesn’t refresh its whole index every day, although it does attempt updates every few hours, so your changed content may not appear immediately. Be patient; it should be indexed soon.

8. You’re Using Plugins That Block Googlebot

A robots.txt plugin is one example. If the robots.txt file you set through such a plugin blocks your whole site, Googlebot won’t be able to crawl it.

Create a robots.txt file.

Make it publicly accessible so crawlers can read it.

Make sure these lines aren’t in robots.txt:

    User-agent: *
    Disallow: /

The forward slash means the file blocks every page under the site’s root folder. Your robots.txt should look like this instead:

    User-agent: *
    Disallow:

With the disallow line blank, crawlers can crawl and index every page on your site (provided no pages are noindexed).
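To confirm how a given robots.txt will be read, you could test it with Python’s built-in `urllib.robotparser`. The sketch below checks whether a crawler identifying itself as Googlebot may fetch a URL. The helper name and the example.com URL are placeholders, and Python’s parser may not match Google’s own parser in every edge case.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt: str, url: str) -> bool:
    """True if the given robots.txt text lets Googlebot fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


if __name__ == "__main__":
    blocked = "User-agent: *\nDisallow: /"
    open_to_all = "User-agent: *\nDisallow:"
    page = "https://example.com/some-page"  # placeholder URL
    print("Disallow: /  ->", googlebot_allowed(blocked, page))
    print("Disallow:    ->", googlebot_allowed(open_to_all, page))
```

The same function can be pointed at the text of your live file to catch a stray blocking rule before Google does.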